Everything posted by pointertovoid

-

Does an introductory text on KDW and KernelEx exist, and where? In a Western European language. Something like:

- What they do, in their various modes of operation
- How they combine with each other, and what the alternatives are
- How they combine with UURollup, uSp5...
- Whether they allow Xp drivers, possibly Ahci drivers, and if so, how to integrate them into the W2k installation disk

Thanks!

-

Building new system (Windows 2000 compatible)

pointertovoid replied to WinWin's topic in Windows 2000/2003/NT4

I'm a happy user of LGA 775 and P45. My E8600 Cpu is about as fast as any recent one on my single-task applications. However, WinWin seems to want more cores, for which today's sockets are better.

I checked recently that Intel's Panther Point (= 7 series) chipset, meant for Sandy Bridge and Ivy Bridge, is supposed to accept the InfInst 9.3.0.1019. This InfInst (obtained at Gigabyte for a P75 chipset and Xp, 1.2MB) seems to accept W2k officially:

- Its Readme mentions W2k
- Its PantUsb3.cat says 2:5.00 - obsolete, which I understand to mean that W2k is accepted (2:5.1 begins at Xp and so on)
- It does offer Usb 3.0, which would then run with W2k officially.
- So only the Ahci and Raid would need an adapted driver - from BWC of course.
- Maybe Intel's Flash cache doesn't work, but who cares if you have an SSD.

Did you try it, or do you have a better rationale?

-
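On the `2:5.00` value quoted from PantUsb3.cat in the InfInst post above: a minimal sketch of how such a string can be read, assuming it follows the usual `platform:major.minor` pattern where platform 2 is the NT family. The helper name `decode_os_attr` is made up for illustration; the version table only lists the well-known NT version numbers.

```python
# Hypothetical helper: decode "platform:major.minor" strings such as "2:5.00".
# Assumption: platform 2 means the NT family; the table holds the
# commonly documented NT version numbers.
NT_VERSIONS = {
    (5, 0): "Windows 2000",
    (5, 1): "Windows XP",
    (5, 2): "Windows Server 2003 / XP x64",
    (6, 0): "Windows Vista",
    (6, 1): "Windows 7",
}


def decode_os_attr(attr):
    """Translate e.g. '2:5.00' into a readable OS name."""
    platform, version = attr.strip().split(":")
    major, minor = version.split(".")
    if int(platform) != 2:
        return "non-NT platform"
    return NT_VERSIONS.get((int(major), int(minor)), "unknown NT version")


for value in ("2:5.00", "2:5.1", "2:6.1"):
    print(value, "->", decode_os_attr(value))
```

This only decodes the number; whether the driver package really installs and works on W2k still has to be tried.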

Thanks a lot! My apologies for not replying sooner... I'm still not converted to UURollup nor KernelEx - but I should be.

On a W2k sp4r1 with official updates, I could install MikTeX v2.8 offline (v2.8 being officially the latest for W2k). The full installation regularly fails, but the full installer can provide all options offline. I use it from LyX 1.5.7-1.

- During install, choose the option "basic installation" (I don't remember the exact name) even though the installer is complete (1GB!);
- Important: keep the "ask me every time" option for installing the missing options of MikTeX;
- From LyX, ask to recheck the missing options with Tools > Reconfigure;
- As missing classes are detected, thanks to the "ask me first" option, you can give the MikTeX installer folder once instead of a Web address;
- Then at least the usual classes and styles used by LyX install.

-

Thanks a lot! Sorry for not replying sooner... KDW is probably the best solution, especially as time passes, but I'm still not converted to it...

Meanwhile I've observed that 1.5.7-1-Installer-Bundle, the latest among 1.5, installs smoothly on my W2k sp4r1 with Msi 3.1v2. It is available there:
ftp://ftp.lyx.org/pub/lyx/bin/1.5.7/

I'll try more recent versions, knowing that 1.6.8 doesn't install.

-

Does this version of BWC's adapted driver work on your disk host? You could look up the identity of the Z77's hardware disk host (vendor=8086, chip=nnnn...), possibly on the Internet, and check whether the driver's .inf file includes it. And (I'm not familiar with nLite, sorry) if you have to indicate the identity of the disk host, is the one you gave right? I ask because I see a "10R" in a file name, which may suggest the ich10r, which is NOT your disk host on the Z77. Could you use an F6 diskette together with a standard W2k installation disk?
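The check suggested above (is the disk host's vendor/chip identity listed in the driver's .inf?) can be sketched in a few lines. This is only an illustration: the function name `inf_lists_device` is invented, and the excerpt and its device IDs (`AAAA`, `BBBB`) and section names are placeholders, not the real IDs of any Intel controller.

```python
import re


def inf_lists_device(inf_text, vendor, device):
    """Return True if the .inf text mentions PCI\\VEN_<vendor>&DEV_<device>."""
    pattern = re.compile(
        r"PCI\\VEN_{}&DEV_{}".format(re.escape(vendor), re.escape(device)),
        re.IGNORECASE,
    )
    return bool(pattern.search(inf_text))


# Made-up excerpt for illustration only; IDs and section names are placeholders.
SAMPLE_INF = r"""
[Manufacturer]
%INTEL%=INTEL_HDC

[INTEL_HDC]
%Dev1.DeviceDesc% = Example_Inst, PCI\VEN_8086&DEV_AAAA&CC_0106
%Dev2.DeviceDesc% = Example_Inst, PCI\VEN_8086&DEV_BBBB&CC_0104
"""

print(inf_lists_device(SAMPLE_INF, "8086", "AAAA"))
print(inf_lists_device(SAMPLE_INF, "8086", "CCCC"))
```

With a real driver, the text would come from the .inf file itself (some are stored as UTF-16, so the file may need to be opened with that encoding), and the device ID would be the one Device Manager reports for the disk host.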

-

The thread is about the throughput of the Ram on the graphic card.

-

Found some bench comparisons:

- http://www.xtremesystems.org/forums/showthread.php?192690-4850-750Mhz-vs-4870/page4
- http://www.hardwaresecrets.com/article/GeForce-GT-440-512-MB-GDDR5-vs-1-GB-DDR3-Video-Card-Review/1272
- http://www.hardware.fr/articles/726-1/b-maj-b-radeon-hd-4800-gddr5-utile.html (Soʁʁy foʁ ze lãguage)

These are synthetic figures, meaning that Ram throughput will often have no importance but will sometimes make the game lag. Also interesting: more Ram throughput is sometimes more important at higher resolution, sometimes at lower resolution. Apparently, recent drivers or dX can cope with a still slow memory by using the internal cache. This must be delicate programming. More inputs?

-

Hello tinkerers! As graphics cards continue to increase their computing power, their onboard Ram keeps more or less the same throughput... Is that a limit? Or do they now have an integrated cache memory, which the drivers and DirectX use efficiently? Did you notice a speed difference between different Ram options? Thanks!

-

Hi nice people! Could you confirm that an (Ati) Amd Radeon Hd 6570 works on W2k using a driver adapted by BlackWingCat? What version would it be? The HD 6570 is cited in CX137814.inf of the adapted v12.4 driver there: http://blog.livedoor.jp/blackwingcat/archives/571484.html Shall I add the adapted KB829884-v3 found there: http://blog.livedoor.jp/blackwingcat/archives/1387370.html Thank you!

-

NVIDIA 9500 GT driver for windows 2000?

pointertovoid replied to zuko1's topic in Windows 2000/2003/NT4

[Moved] -

I've already seen a mobo making Ram errors with Ddr400 but running fine with slower modules. My understanding - but I may be wrong - is that its stupid Bios checks only the Ram speed, not the capability of the North bridge, and tries to set a speed that the chipset cannot sustain. Observations:

- Runs with Ddr333
- Doesn't boot with Ddr400. Memtest86+ detects some errors.
- Runs if one Ddr333 is added to the Ddr400.

-

I've received and tried my first Hdd with 4kiB sectors, a Hitachi (r.i.p., I liked them) 7k1000.d of 1TB, or Hds721010dle630. Apparently, it always emulates 512B sectors, and the user can't change that. Do you agree? That will be a worry above 2TiB because many Windows versions can't address that many sectors - a hidden reason for bigger sectors.

When formatting it, Ntfs "default cluster size" naturally chose 4kiB, and W2k's Defrag runs on it without complaining.

Hitachi provides software to align existing volumes so they begin at a 4kiB sector boundary instead of sector 63. This is important when accessing more than 512B, as it avoids accessing one more 4kiB sector just for its last 512B. Typically W2k and Xp would create the first volume at sector 63. Other manufacturers have a jumper that shifts sector numbers by 1, so that W2k and Xp would effectively create the first volume at sector 64.
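The arithmetic behind the two points above (alignment at sector 63 versus 64, and the 2TiB worry) can be written out as a small sketch. It assumes 512B emulated sectors on 4kiB physical sectors as described, and a 32-bit sector count for the addressing limit; the function names are only illustrative.

```python
LOGICAL_SECTOR = 512     # bytes per emulated sector, as seen by the OS
PHYSICAL_SECTOR = 4096   # bytes per real sector on the platter


def is_aligned(start_lba):
    """True if a volume starting at this emulated sector sits on a 4kiB boundary."""
    return (start_lba * LOGICAL_SECTOR) % PHYSICAL_SECTOR == 0


def physical_sectors_touched(start_lba, length_bytes):
    """Number of 4kiB physical sectors covered by one access."""
    first_byte = start_lba * LOGICAL_SECTOR
    last_byte = first_byte + length_bytes - 1
    return last_byte // PHYSICAL_SECTOR - first_byte // PHYSICAL_SECTOR + 1


def max_capacity_bytes(sector_size, lba_bits=32):
    """Largest addressable size, assuming a 32-bit sector number."""
    return (2 ** lba_bits) * sector_size


for start in (63, 64):
    print(start, is_aligned(start), physical_sectors_touched(start, 4096))

print(max_capacity_bytes(512) // 2 ** 40, "TiB with 512B sectors")
print(max_capacity_bytes(4096) // 2 ** 40, "TiB with 4kiB sectors")
```

A 4kiB cluster read from a volume starting at sector 63 straddles two physical sectors, while the same read from sector 64 touches only one.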

-

I had very slow Explorer operation on a W2k without extensions when volumes had a broken Fat or when new disks were detected but badly installed. This can also happen if one uses badly chosen Compact Flash cards as replacements for mechanical disks. One other cause can be transmission errors over the disk cable and interface.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

Your figures with random access are now consistent with other disk measurements. I dare to assert that random access measured with IOMeter, within a limited size and with some parallelism, is a better hint of real-life performance than other benchmarks are.

Udma is fully usable with Win95 if you provide the driver. Ncq only needs parallel requests, which already exist in Nt4.0 and maybe before - but not in W95-98-Me; it also needs adequate drivers willing to run on a particular OS. Seven (and Vista?) only bring additional mechanisms to help the wear leveling algorithm of Flash media, keeping writes fast over time.

To paraphrase bphlpt: Windows since 98 knows which files are loaded in what order because a special task observes this. Windows' defragger uses this information to optimize file placement on the disk and reduce the arm's movements. Xp and its prefetch go further by pre-loading the files that belong together before Win or the application requests them; this combines very well with Ncq, as it issues many requests in parallel, and partly explains why Xp starts faster than 2k with less arm noise.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

Jaclaz: and why I asked the question in the form: With IOMeter, Ncq effect is very observable; very few other benchmarks show an effect; and the amount of improvement shown by IOMeter is consistent with real-life experience. Just adding some duplicate plethoric redundant repetition again, to confirm we agree... -

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

My understanding is that read or write requests to not-yet-accessed sectors are sent to the disk immediately (unless you check "delayed write" in the driver properties), but any data read or written stays in the mobo Ram as long as possible for future reuse - that is, as long as Ram space is available. This means that the disk is still updated immediately (if there is no delayed write). And, yes, I believe the Udma controller can access any page of the mobo Ram. That isn't very difficult, if you look at a block diagram of the memory controller.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

(1) I too understand it as the number of simultaneous requests. 32 is huge and fits servers only. 2 or 4 is already a lot for a W2k or Xp workstation.

(2) IOMeter measures within a file of the size you define, beginning where you wish if possible (I hope it checks that beforehand). The file size should represent the size of the useful part of the OS or application you want to access; 100MB is fine for W2k but too small for younger OSes. After the first run begins, I stop it and defragment the volume so that this file is contiguous.

(3) You lost. 0% random means contiguous reads, which explains why you get figures as unrealistic as with Atto. I measure with 100% random, which is pessimistic. You define it by editing (or edit-copying) a test from the list; a slider adjusts that.

(4) Something like "place inline" after the file is uploaded and the cursor is where you want it.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

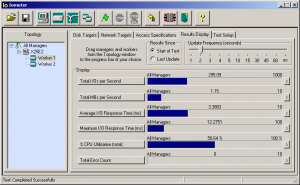

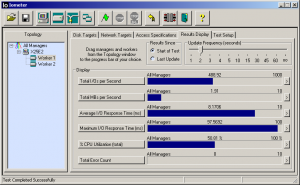

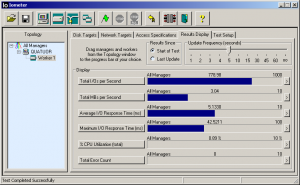

NCQ makes a huge difference in real life. When booting W2k (whose disk access isn't as optimized as Xp's with its prefetch) it means for instance 18s instead of 30s, so Ahci should always be used, even if it requires an F6 diskette.

Different disks have different Ncq firmware, resulting in seriously different experienced performance, and this cannot be told in advance from the disks' datasheets, nor from benchmarks of access time and contiguous throughput. Anyway, the arm positioning time between nearby tracks should matter more than random access over the whole disk, which real usage never requires.

Few benchmarks make a sensible measurement here. Ncq is meaningful only if a request queue is used, and Atto, for instance, isn't very relevant here: it seems to request nearly contiguous accesses, which give unrealistically high performance and show little difference between Ncq strategies. It is however a nice tool to observe quickly whether a volume is aligned on a Flash medium. The reference here is IOMeter, but it's not easy to use, especially for access alignment. Recent CrystalDiskMark versions test with Q=32, which is far too much for a workstation (Q=2 to 4).

Example of IOMeter (see the images) with Q=1, versus Q=4 on one 600GB Velociraptor, versus Q=4 on four VRaptors in aligned Raid-0, on random 4k reads over 100MB (but 500MB for the Raid). An SSD would smash N*10k IO/s in this test.

As a practical consequence, an Ssd should be chosen based on the few tests available, and only from users: IOMeter or, failing that, CrystalDiskMark, which at least tells the random delay for small writes. Just as good are "practical" comparisons of copying or writing many files. On the disks I own, I check the "find" time of some file names among ~100,000 Pdf in many subdirectories, the write time of many tiny files...

-
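To illustrate why a request queue matters in the Ncq post above, here is a toy model, not a measurement: the disk may serve the nearest of up to Q pending requests instead of strictly the oldest one. It only counts arm travel over a normalized 0..1 stroke and ignores rotational position, which real Ncq firmware also takes into account; the function name and the seed are arbitrary choices for the sketch.

```python
import random
from collections import deque


def total_arm_travel(requests, queue_depth, start=0.0):
    """Sum of head movements when the disk may pick the nearest of up to
    queue_depth pending requests (queue_depth=1 is plain arrival order)."""
    incoming = deque(requests)
    pending = []
    position, travel = start, 0.0
    while incoming or pending:
        # Keep the queue filled up to the allowed depth.
        while incoming and len(pending) < queue_depth:
            pending.append(incoming.popleft())
        # Serve the pending request closest to the current arm position.
        nearest = min(pending, key=lambda target: abs(target - position))
        pending.remove(nearest)
        travel += abs(nearest - position)
        position = nearest
    return travel


rng = random.Random(2012)  # fixed seed so runs are repeatable
positions = [rng.random() for _ in range(1000)]
for depth in (1, 2, 4, 32):
    print("Q =", depth, "travel =", round(total_arm_travel(positions, depth), 1))
```

In this model the travel shrinks as Q grows, which matches the direction of the IOMeter results described above, though the actual gain depends on each disk's firmware.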

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

Yes, get an Ssd at least for the OS, your documents, and small applications. They're hugely better than mechanical disks for any data that fits the limited size. They are affordable as used disks on eBay: I got a used Corsair F40 (40GB MLC with Sandforce) delivered for 50€, and it's excellent.

Their efficient use is difficult to understand and implement, and little simple reading is available. Wiki? The buyer-user should understand in advance SLC vs MLC, especially the implications for write delay, and the resulting need for a good cache AND cache strategy, which means a controller and its firmware, all-important with MLC. Older MLC are bad.

Wear leveling is also very important to understand but not simple, especially as write performance drops over time with older Win. With Seven (or Vista?) and up, write performance shouldn't drop; with Xp, you can get the same effect through tweaks; with W2k I couldn't get these tweaks to run - but my disk is an SLC X25-E anyway, nearly insensitive to anything, and so fast that it waits for the Cpu.

Alignment is important for speed; some measurements there on a CF card with Ntfs (easy) and Fat32 (needs tweaking). Dandu.be made exhaustive measurements but he didn't tweak alignment, alas.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

Bonsoir - Goedenavond Johan! Yes, this is the way I understand it. Until I change my mind, of course.

What Atto could mean by "direct I/O" is that it does NOT use Windows' system cache. The system cache (since W95 at least) is a brutal feature: every read or write to disk is kept in main Ram as long as no room is requested in this main memory for other purposes. If a piece of data has been read or written once, it stays available in Ram. Win takes hundreds of megabytes for this purpose, with no absolute size limit. So the "cache" on a hard disk, which is much smaller than the main Ram, cannot serve as a cache with Win, since this OS will never re-ask for recent data. Silicon memory on a disk can only serve as a buffer - which is useful especially with Ncq.

This is very clear to observe with a diskette. Reading or writing on it takes many seconds, but re-opening a file already written is immediate.

-----

Cluster size matters little as a result of the Udma command. It specifies a number of sectors to be accessed, independently of cluster size and position. A disk doesn't know what a cluster is. Only the OS knows it, to organize its Mft (or Fat or equivalent) and compute the corresponding sector address.

The only physical effect on a disk is where files (or file chunks if fragmented) begin; this is important on Flash storage, less important in a Raid of mechanical disks, and of zero importance on a single mechanical disk, where tracks have varied, unpredictable, bizarre sizes within one disk.

-

HDD performance <-> Allocation unit size

pointertovoid replied to DiracDeBroglie's topic in Hard Drive and Removable Media

The contiguous read throughput is essentially independent of the cluster size. Your observations are correct.

Files are usually little fragmented on a disk, and to read a chunk of them, the Udma host tells the disk "send me sectors number xxx to yyy". How the sectors are spread across the clusters makes zero difference there. Clusters only tell where a file shall begin (and sometimes be cut if fragmented), and since mechanical disks have no understandable alignment issues, clusters have no importance.

This would differ a bit on a CF card or an SSD, which have lines and pages of regular size, and there aligning the clusters on line or page boundaries makes a serious difference. Special cluster sizes, related to the line size (or, more difficult, to the page size), allow a repeatable alignment. Exception: the X25-E has a 10-way internal Raid-0, so it's insensitive to power-of-two alignment.

So cluster size is not a matter of hardware. It is much more a matter of Fat size (important for performance), Mft size (unimportant), and lost space. On a mechanical disk, you should use clusters of 4kiB (at least with 512B sectors) to allow the defragmentation of Ntfs volumes.

-
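The "send me sectors number xxx to yyy" point above can be checked with a little arithmetic. This is a simplified sketch: it assumes a contiguous file, 512B sectors, and ignores the filesystem's own metadata area in front of the data; the function and parameter names are invented for the example.

```python
SECTOR = 512  # bytes per sector


def sector_range(volume_start_lba, cluster_size, first_cluster, offset, length):
    """First and last sector number for reading `length` bytes at `offset`
    inside a contiguous file that begins at cluster `first_cluster`."""
    file_start_byte = volume_start_lba * SECTOR + first_cluster * cluster_size
    first = (file_start_byte + offset) // SECTOR
    last = (file_start_byte + offset + length - 1) // SECTOR
    return first, last


# Same 1MiB read with three different cluster sizes.
for cluster_size in (512, 4096, 65536):
    first, last = sector_range(63, cluster_size, 10, 0, 1024 * 1024)
    print(cluster_size, "->", first, "to", last, "=", last - first + 1, "sectors")
```

The cluster size moves the starting sector but not the number of sectors requested, which is all the disk ever sees.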

Last Versions of Software for Windows 2000

pointertovoid replied to thirteenth's topic in Windows 2000/2003/NT4

Which Windows binary version of ImageMagick runs on plain vanilla W2k, I mean without Api extensions?

- v6.3.7 Q16 did; it came with LyX 1.5.4.
- v6.7.7-7, the latest now in June 2012, does not, as it was compiled with Visual Studio 2010 (without the proper options).

The publisher's site says nothing. Several users asked, and I insisted again there: https://www.imagemagick.org/discourse-server/viewtopic.php?f=2&t=20703

Does anyone know a more precise version limit?