Mark-XP

Everything posted by Mark-XP

-

My Browser Builds (Part 5)

Mark-XP replied to roytam1's topic in Browsers working on Older NT-Family OSes

I'm in awe of your patience, @VistaLover

-

My Browser Builds (Part 5)

Mark-XP replied to roytam1's topic in Browsers working on Older NT-Family OSes

Many thanks @VistaLover for your explanations, @basilisk-dev for resolving the glitch, and @roytam1 for not inheriting it (at the last moment)

-

My Browser Builds (Part 5)

Mark-XP replied to roytam1's topic in Browsers working on Older NT-Family OSes

Hello @basilisk-dev, I'm reporting an issue here that I found in the latest May releases of 64-bit Basilisk, both Win (Archive) and Linux (x68): the (vertical) tabs on the Tools -> Preferences page aren't accessible any more! Your December versions and roytam's mid-April Spt.52.9 versions do work well in this regard. Sorry for posting here: I was kicked out of the Pale Moon forums and haven't had much desire to post there since... Edit: to be more precise: if you close the preferences page and then re-open it, it opens on the previously selected tab.

-

My Browser Builds (Part 5)

Mark-XP replied to roytam1's topic in Browsers working on Older NT-Family OSes

Using Spt & NM about 50% of the time for (pre-)listening to & collecting music, I do share your disappointment, but not the frustration: I stopped using YT completely (access only directly via youtube-dl), no more SoundCloud, never any need for Spotify at all, Discogs only with NoScript activated (works passably). All albums & tracks I purchase at Bandcamp (with nice pre-listening options), directly from the artists or (smaller) labels. My guess is that 99% of serious contemporary artists offer their work there (at least in my lucky case).

-

(Not only an exceptional Developer, @Dietmar seems to have a fine sense of humor too!)

-

Let's try to get the Intel LAN I219-V running with XP!

Mark-XP replied to Mark-XP's topic in Windows XP

For i211 this one did work with XP/32 on my rig: https://www.techspot.com/drivers/driver/file/information/15828/

-

Sorry, it was already commented above...

-

or eax, eax - what does that do? Is this meant to initialize the flags OF, CF, or to modify the SF, ZF, PF flags?
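For reference, here is a minimal sketch of what the x86 OR instruction does to the flags when both operands are the same register. It is a plain Python model, not real assembly: the function name `or_flags` and the dictionary layout are made up for illustration. It relies only on the documented behaviour of OR: the result is the bitwise OR of the operands (so eax keeps its value), OF and CF are cleared, SF, ZF and PF are set according to the result, and AF is left undefined (so it is not modelled here).

```python
MASK32 = 0xFFFFFFFF  # model a 32-bit register such as eax


def or_flags(dst: int, src: int) -> dict:
    """Hypothetical helper: result and flags of a 32-bit x86 OR."""
    result = (dst | src) & MASK32
    low_byte = result & 0xFF
    return {
        "result": result,                 # with dst == src the value is unchanged
        "CF": 0,                          # OR always clears the carry flag
        "OF": 0,                          # OR always clears the overflow flag
        "ZF": int(result == 0),           # set if the result is zero
        "SF": (result >> 31) & 1,         # copy of the most significant bit
        # PF is set if the low byte holds an even number of 1 bits
        "PF": int(bin(low_byte).count("1") % 2 == 0),
    }


if __name__ == "__main__":
    for eax in (0x00000000, 0x00000003, 0x80000001):
        print(hex(eax), or_flags(eax, eax))
```

So both readings in the question apply at once: the register is left as it was, OF and CF end up cleared, and ZF/SF/PF describe the current value, which is why the instruction is commonly followed by a conditional jump such as jz or js.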

-

Interesting: I, on the contrary, expanded nvhdci.inf, because I found an existing 710 entry there; I just copied it and replaced the device ID accordingly. Worked nicely!

-

Thank you @AstroSkipper, so I'll give Aomei PAssist a try the next time I have this task!

-

@bluebolt (or anyone else who knows): is Aomei PAssist capable of copying/cloning a partition (from one disk) to another existing partition (on a second disk)? Thanks!

-

My Browser Builds (Part 5)

Mark-XP replied to roytam1's topic in Browsers working on Older NT-Family OSes

Indeed, it's mentioned there; the parameter is "--no-remote". Is there any difference from "-no-remote" as specified by Mozilla (and used by me all along)?

-

It shouldn't be a problem to find Intel's latest LAN drivers for Win7, e.g. with Google. I used this one from Gigabyte; version 20.7 works well.

-

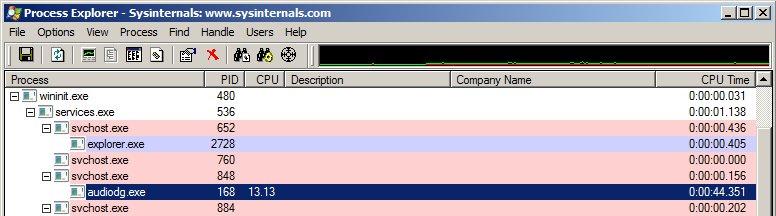

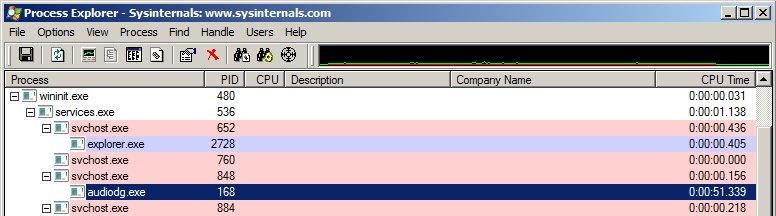

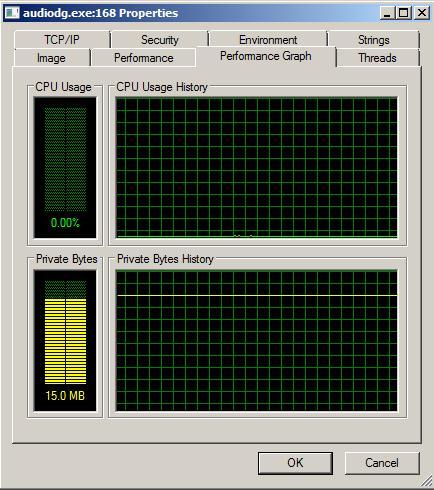

It seems that I am now, 8 years later, confronted with this crap: to get more out of my new motherboard's Realtek 1150 codec, I decided to install the WDM driver 2.74 (same as on XP) to replace 7's standard driver (because I noticed that music sounds significantly better on XP...). Now this is what then started to happen sporadically while playing music (Winamp 2.91): audiodg.exe starts to consume up to 15% of CPU for about a minute. Afterwards it calms down again: the attack has consumed 50 CPU-seconds, and it then behaves normally for maybe the next half hour. IMO there is no memory leak at all, though; 15 MB seems quite regular. I'm going to decide this weekend whether I can accept that crappy behaviour...

-

Yes, and it's a shame IMO: there is no possibility to force a suitable interpolated 1440x900 resolution with the integrated Intel HD 530 graphics for an old but valuable Eizo S2110W monitor. The native 1680x1050 is far too small; the offered standard 1280x1024 and 1024x768 interpolations are kind of useless.

What I've tried so far, and what did not work:

- Martin Brinkmann's Custom Display Resolution Utility (XP doesn't support EDID overrides)
- The command line utility QRes (mentioned here)
- The HKEY_CURRENT_CONFIG\System\CurrentControlSet\Control\VIDEO hack
- The Universal VBE Video Display Driver (2015 version: VBE20, VBE30) that @Dietmar tried on his beloved HP 255 g6

It feels a bit like a sacrilege to add that old 28nm GT-710 (sorry, godfather Kepler) to my 14nm Skylake rig, which runs so divinely energy-efficiently without external graphics. If anyone here knows another approach to enforce a custom resolution on XP ...

Edit: FWIW: a GT-710 vs HD 530 comparison

-

Btw: directly under the "Open with" entry in the context menu there's an entry which I'd translate as "Restore predecessor versions" ("Vorgängerversionen wiederherstellen" in German). Since I deactivated all 'shadow techniques' in my 7 environment, this entry is rather useless and I'd like to remove it from the context menu. What is its name in English? Did anyone here remove it successfully? Edit: it seems to be "previous versions", and NirSoft's ShellExView should be the tool of choice.

-

Let's try to get the Intel LAN I219-V running with XP!

Mark-XP replied to Mark-XP's topic in Windows XP

Hello @Dibya, first some clarifications, please:

- E1000E is the name of the Linux driver for a class of Intel® Gigabit NICs (like the i219).
- NetAdapterCx is the 'modern' MS driver model for Win10 and later (in contrast to the older NDIS model).
- The approach that you refer to above (https://github.com/coolstar/if_re-win) is an (NDIS-type?) wrapper 'around' the NetAdapterCx driver (in that case for a Realtek NIC), compatible with Win-XP (/2000 /Vista).
- Your plan now is to write such a wrapper around the NetAdapterCx driver for the i219.

I presume you're mentioning the (Linux) e1000e above because you'll use it as a 'bonanza' of ideas and techniques, is that correct? What would the concept be then: a direct wrapper (NetAdapterCx -> NDIS) or a detour via FreeBSD (NetAdapterCx -> FreeBSD -> NDIS)?

I've got only basic C knowledge (and even less in driver implementation) to offer as support, but within 2 or 3 weeks I'll migrate to the (above-mentioned Z170) system with an i219 and will be able to test. And, if that's of any interest or advantage, it will house a Debian partition too. And besides me, I'm quite sure there will be some others here (with an idle i219) eager to test...