nmX.Memnoch's Achievements

-

You'll need to create a separate backup schedule for the second drive...even though you're backing up the same information. Windows Backup in Server 2008 detects the differences in the destination. Even though the drives are named the same, they have a different serial number and Windows Backup sees that.

-

If you're using external drives, at the very least you should be using Windows Backup to get the System State data (this will get the registry, AD database and other system-related files). Windows Backup will also get any data from file shares. If you're using SQL Server, it has a built-in backup function and you can have it automatically put the files on the external rig. Windows Backup in Server 2008 and 2008 R2 is quite a bit different from what you were used to with Server 2003.

-

cluberti has already touched on many of the points on why you wouldn't do this on a lower-end enclosure like this. The only thing I want to do is explain why you're seeing the dropped performance. When you had the VMs on the internal storage, each drive had its own SATA connection for communicating with the host controller. This meant that reads or writes happening to multiple drives at the same time had dedicated channels for each drive. When you moved to the Drobo S, the configuration changed so that all of the drives are funneled through a single connection to the host controller. You drastically reduced the overall bandwidth available to the drive subsystem by doing this. Moving to the Drobo FS or even Drobo Pro isn't really going to solve this problem. The Drobo Elite may be a slightly better solution since it has dual iSCSI connections, but I don't think it'll be as good as your internal drive solution was. Is this for a single server or are you trying to move to a clustered Hyper-V solution? For a single server there's really no good reason, other than adding spindles, to move to external storage. Adding more spindles doesn't do much good if you don't have the proper connection between the server and the storage because you can't take advantage of the additional throughput. And, as you found out, the wrong connection type can actually make performance worse.
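To put rough numbers on the dedicated-versus-shared point, here is a minimal sketch. The 300 MB/s link speed and the four-drive count are assumptions picked purely for illustration (they are not figures from this thread), and `per_drive_bandwidth` is a made-up helper name. It only compares theoretical link ceilings, not what real drives actually sustain.

```python
def per_drive_bandwidth(link_mb_s: float, active_drives: int) -> float:
    """Best-case bandwidth per drive when the drives split one link evenly."""
    if active_drives < 1:
        raise ValueError("active_drives must be at least 1")
    return link_mb_s / active_drives


# Hypothetical figures for illustration only (not taken from the thread).
LINK_MB_S = 300.0   # assumed theoretical ceiling of one link, in MB/s
DRIVES = 4          # assumed number of drives busy at the same time

# Internal layout: every drive has its own link to the host controller.
dedicated_total = LINK_MB_S * DRIVES

# External enclosure: all drives are funneled through one link.
shared_total = LINK_MB_S
shared_per_drive = per_drive_bandwidth(LINK_MB_S, DRIVES)

print(f"Dedicated links: {dedicated_total:.0f} MB/s total, {LINK_MB_S:.0f} MB/s per drive")
print(f"Single shared link: {shared_total:.0f} MB/s total, {shared_per_drive:.0f} MB/s per drive")
```

With those assumed numbers the ceiling for the whole drive subsystem drops to a quarter of what it was, which is the kind of gap that shows up once several VMs hit the disks at once.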

-

On Windows client OS's (XP, Vista, 7) you're allowed up to 10 connections to an SMB share. You're getting close to that limit. Windows Server 2008 R2 will open up a lot more doors for you. You probably need to get your data over onto some hardware with redundancy anyway so a server wouldn't be a bad idea. In your situation I would look at either a Dell PowerEdge T310 or T610 and use Hyper-V 2008 R2. The T610 has a higher memory capacity which means you can run more VMs with sufficient memory allocation for each one. You may not need that many VMs to start with but it'll also give you room to grow. Never purchase a server for what you need now; purchase it with room for growth because technology and requirements constantly change. At the same time you don't want to overbuy either. That balancing act is a fine art. At that point you could implement Active Directory, folder redirection for things like My Documents and Favorites, server-side shares, etc. Your environment is small so it wouldn't be overly complicated to manage. In all honesty, it will probably simplify some of the things you currently do (Group Policy alone will do that for you). Using Hyper-V 2008 R2 you do have licensing considerations for each virtual machine, but your environment is small enough that you could start with Server 2008 R2 Standard (with the exception maybe of your Certificate Authority...there are some certificate templates that aren't available on Standard). DirectAccess rocks! We've just implemented it at work and our users LOVE not being required to initiate the VPN connection. It just works. The one thing I will warn you about is that unless your internal network is native IPv6 you won't be able to use just the DirectAccess feature built into Server 2008 R2. We ended up using Forefront Unified Access Gateway.
There are several other requirements such as two network connections on the server, two consecutive public IP addresses that are NOT NAT'ed, a Certificate Authority (which is easy to set up, but DON'T put it on a Domain Controller), an internal-only website, and Windows 7 on the clients (it's supposed to work on XP and Vista but we're moving everything to 7 so I haven't investigated that). UAG DA may not be worth the extra cost for only four users. If you already have a VPN solution you'll definitely want to weigh those options. Short answer: No. This can be done using Remote Desktop Services, Citrix or Microsoft App-V...all of which require a server. You still have to purchase CALs for each user that accesses Office. Again, your environment is small enough that it doesn't justify the cost and it really won't simplify things that much (it'll actually add complexity).

-

You really need some managed switches. I'll go back to the Extreme switches again. They have a feature called ELRP--Extreme Loop Recovery Protocol. It's not on by default but once configured it will detect a loop and automatically disable the looped ports. If you have their management app--EPICenter--you can configure it to forward switch events to you via email and/or text message so you'll know immediately when that loop is created and which ports it was created on. It also helps to be able to disable unused ports so someone can't just walk up and plug in.

-

Your issue sounded like a loop got created! I was kinda reading through the earlier posts in this thread. Have you replaced those switches with managed switches yet? We use Extreme Networks switches. Very fast, very easy to configure and not nearly as expensive as other options (yes, still expensive, but not nearly as much as other alternatives). If you aren't doing any VLAN management (which you currently aren't since they're unmanaged switches) and don't need Layer 3 managed switches you can look at their X150 (10/100) and X350 (Gigabit) line. They're Layer 2 only and can't be stacked, but they're also much cheaper. We use a combination of X450's, X250's and X150's.

-

It very likely is password complexity but you usually get a message that says "the password does not meet the complexity requirements...". If this is the same server as your other thread you've got some other issues going on that we need to figure out. First, you mentioned that the preferred DNS server is 127.0.0.1. What other DNS servers do you have configured in the network properties?

-

Yes, it sometimes "just feeds to an internal SATA port" through a cable. However, for fully compliant eSATA operation you need to make sure the ports/controllers are designed for eSATA compliance. What I mean by that is they're designed for hot swapping SATA drives. More specifically, SATA drives in fully compliant eSATA enclosures. The next eSATA standard is also going to call for power-over-eSATA so that you don't have to carry around a power brick with your drive. I imagine this will only work for 2.5" drives, but full details on the new spec haven't been released (or I haven't seen them yet anyway). http://www.sata-io.o...ology/esata.asp Unfortunately, the SATA specification is a moving target and not all features are required to be supported by the controller. This is why when SATA300 was first introduced you had to figure out if your controller AND drive supported NCQ or not. As for FW800, I wouldn't bother. Support is spotty at best and not very many devices will support it. Most external hard drive enclosures only support FW400, if they include FW connectivity at all. You also don't want to do FW800 over a PCI bus. I'm not even sure that you can find a FW800 card that's PCI only. They're usually 64-bit PCI, PCI-X or PCI Express. Transferring data to or from two FW800 devices at the same time over the PCI bus will saturate the bus completely. The PCI bus is 133MB/s shared with all PCI devices. A single FW800 connection is 100MB/s max theoretical. It would require 200MB/s (again, theoretical maximum, probably more like 150-170MB/s) to do two simultaneous FW800 transfers.
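The PCI versus FW800 arithmetic above can be checked with a few lines of Python. This is just a sketch using the theoretical peak figures quoted in the post (133MB/s for the shared PCI bus, 100MB/s per FW800 link). The function name `bus_utilization` is made up for illustration, and real-world throughput will be lower on both sides.

```python
# Theoretical peak figures quoted in the post above, in MB/s.
PCI_BUS_MB_S = 133   # PCI bus, shared by every PCI device in the system
FW800_MB_S = 100     # one FireWire 800 connection, theoretical maximum


def bus_utilization(concurrent_links: int,
                    per_link_mb_s: float = FW800_MB_S,
                    bus_mb_s: float = PCI_BUS_MB_S) -> float:
    """Return the demanded bandwidth as a fraction of the bus capacity."""
    return concurrent_links * per_link_mb_s / bus_mb_s


for links in (1, 2):
    demand = links * FW800_MB_S
    print(f"{links} FW800 transfer(s): {demand} MB/s demanded, "
          f"{bus_utilization(links):.0%} of the PCI bus")
```

One transfer already asks for roughly three quarters of the bus, and two ask for about one and a half times what the bus can deliver, before any other PCI device gets a turn.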

-

Back in the later days of 72-pin SIMMs there were systems that required them to be installed in pairs, so using sticks in pairs has been around much longer than RAMBUS. RAMBUS was just the first one to optimize how they worked in pairs...at the desktop level. Servers have also required memory be installed in pairs (and sometimes quads) for quite some time. The ServerWorks chipset in his server will actually use "memory interleaving" (aka dual-channel). It sucks that Broadcom let ServerWorks drop off after they bought them because they used to make some awesome chipsets...even if their PATA controllers sucked (you were generally using SCSI-based drives on them anyway so that didn't matter). Using multiple DIMMs at the desktop level is another case of server technologies trickling down. This always happens. Look at the number of consumer-level motherboards that come with integrated RAID nowadays.

-

There's one in Phoenix on the 22nd of April. https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032446379&Culture=en-US Click on the "Dates don't work?" link on the page you linked.

-

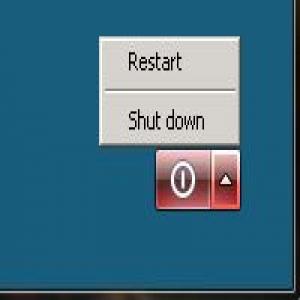

Server 2008 r2 shutdown button missing from start menu

nmX.Memnoch replied to a topic in Windows Server

The Shutdown and Restart options don't appear through RDP sessions. This was done to prevent accidental shutdowns or restarts of servers. Select the "Windows Security" option from the Start Menu. Once you do that the option to Shutdown or Restart is available in the lower right-hand corner. See the attached screenshots (taken from a Server 2008 R2 RDP session).

-

Unable To Connect Online With Fresh Install

nmX.Memnoch replied to Redhatcc's topic in Windows Server

What are you using for DNS servers? Post the full settings of IPCONFIG from your 2008 box and from one of your workstations.

-

Only the keyboard and mouse is USB. The video is not. Yes, I understand that. However, if you look at the "Sessions" column of the "Users" tab in Task Manager you will see that all of your "consoles" are indeed RDP sessions. That's why I called it "RDP over USB", with the obvious exception that video is done over a VGA/DVI/HDMI/DP connection. My point being that Aero doesn't work over RDP, which is why you don't get the full Aero interface on each console. Totally disagree about that. Disagree if you will but the recommendation still stands, and for very good reason. I would hope that you didn't stop reading there and read the rest of the post on why I recommended it. Implementing RAID isn't as daunting/difficult as it used to be (not that it ever really was with the right controller). Using RAID isn't always about providing speed improvements either. In this case it would be more about providing better uptime. While RAID isn't total protection, it certainly minimizes the impact of a drive failure. If your company can afford to pay those employees to sit idle while the system is being repaired (read: reinstalled and reconfigured) then I guess you don't really need to implement it. Most companies would prefer not to have one employee sitting idle due to a hardware failure, let alone several just because one system failed.

-

Are you talking about capturing and restoring SCCM images? There's a workaround but I don't have the information readily available right now...

-

If I'm not mistaken removing it from the domain won't delete the shares. I know for a fact it won't change the NTFS permissions and I'm pretty sure it won't change the share permissions either. So when you join it back to the domain the server should be able to resolve the SID information from domain accounts again. Aside from that...yeah, you do need to figure out why the trust relationship broke in the first place.